In an era of information overload, organizing and analyzing data efficiently matters more than ever. One of the most useful recent advances in artificial intelligence is the use of language models to help process that data. My recent experiments building language-model-powered applications have shown how well these tools can turn raw, unstructured text into organized information that is ready for analysis.

The Power of Language Models in Data Labeling

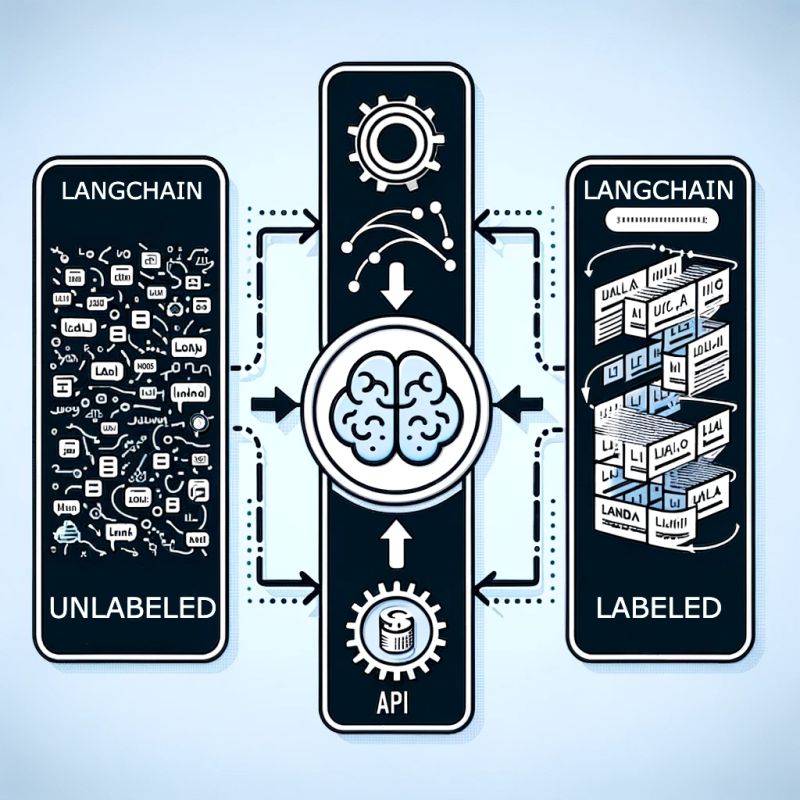

My experiments have centered on OpenAI’s APIs and LangChain, an open-source framework for building applications on top of language models. Together they have proven instrumental in tackling a persistent challenge in data science: labeling unlabeled data consistently. A task that traditionally requires extensive manual effort can now be largely automated through careful prompting and the right tooling.

Key Insights from Using OpenAI and LangChain

OpenAI’s models are strong at understanding and generating human-like text. LangChain, a framework for building and deploying language-model-powered applications, supplies the scaffolding around them: prompt templates, chains, and output parsers. I have used this combination to process and label plain text extracted from hundreds of documents. The result is a set of coherent, consistent, structured datasets ready for in-depth analysis.
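To make the idea of a structured dataset concrete, here is a minimal sketch of the batch step, assuming each document has already been reduced to a `(doc_id, text)` pair. The `label_fn` parameter stands in for whatever model call you use; `fake_label_fn` is a hypothetical keyword rule so the example runs offline, and the function and column names are illustrative, not taken from any library.

```python
import csv
import io
from typing import Callable, Iterable

FIELDS = ["doc_id", "label", "text"]  # illustrative column order


def label_documents(
    docs: Iterable[tuple[str, str]],
    label_fn: Callable[[str], str],
) -> list[dict[str, str]]:
    """Apply label_fn to each (doc_id, text) pair and return uniform records."""
    return [
        {"doc_id": doc_id, "label": label_fn(text), "text": text}
        for doc_id, text in docs
    ]


def to_csv(records: list[dict[str, str]]) -> str:
    """Serialize records to CSV text with a fixed column order."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


def fake_label_fn(text: str) -> str:
    """Hypothetical stand-in for a language-model call: a keyword rule."""
    return "invoice" if "amount due" in text.lower() else "other"


if __name__ == "__main__":
    docs = [("a.txt", "Amount due: $120"), ("b.txt", "Meeting notes")]
    print(to_csv(label_documents(docs, fake_label_fn)))
```

Fixing the columns in one place is what keeps the output uniform across hundreds of documents, whichever model ends up producing the labels.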

The Process: Prompt Refinement and Output Parsing

The process begins with iteratively refining the prompts sent to the language model. This step is critical: output quality depends heavily on how specific and clear the prompts are, for example whether they spell out the allowed labels and the exact output format. Next, LangChain’s ready-made chains and output parsers take over, turning free-form model responses into structured records and making a once-daunting labeling task streamlined and efficient. Automatically categorizing and labeling large volumes of text not only saves time but also makes possible analyses that were previously impractical for lack of resources.
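The prompt-then-parse pattern can be sketched without any framework. This is a minimal, standard-library illustration, not LangChain’s API: `LABELS`, `PROMPT_TEMPLATE`, `build_prompt`, and `parse_label` are hypothetical names, and the label set is made up. The prompt pins down the allowed labels and the reply format; the parser rejects anything that does not match.

```python
import json

# Hypothetical label set; replace with the categories your project needs.
LABELS = ["complaint", "question", "praise"]

# Doubled braces survive str.format as literal braces in the JSON example.
PROMPT_TEMPLATE = (
    "Classify the text into exactly one of these labels: {labels}.\n"
    'Reply with JSON only, in the form {{"label": "<label>"}}.\n\n'
    "Text: {text}"
)


def build_prompt(text: str) -> str:
    """Fill the template so every request states the same labels and format."""
    return PROMPT_TEMPLATE.format(labels=", ".join(LABELS), text=text)


def parse_label(reply: str, fallback: str = "unknown") -> str:
    """Extract and validate the label from a model reply.

    Returns `fallback` if the reply is not valid JSON, is not a JSON object,
    or names a label outside LABELS.
    """
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return fallback
    if not isinstance(data, dict):
        return fallback
    label = str(data.get("label", "")).strip().lower()
    return label if label in LABELS else fallback


if __name__ == "__main__":
    print(build_prompt("Why was I charged twice?"))
    print(parse_label('{"label": "Question"}'))
```

In a real chain, LangChain’s prompt templates and output parsers (such as its JSON and Pydantic parsers) play these two roles. Mapping malformed or out-of-vocabulary replies to a single `unknown` label, rather than accepting them, keeps the label set uniform and leaves a short list of items to review by hand.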

The Role of Open-Source Models

I have also experimented with open-source models and compared their outputs with those from OpenAI’s APIs. In my comparisons, OpenAI’s models stood out for accuracy, but open-source models remain valuable alternatives: they can be self-hosted and customized for specific needs. The right choice depends on the project’s requirements, including cost, data privacy, and how much control you need over the processing pipeline.
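One way to make such a comparison measurable (an assumption on my part, not necessarily how the comparison above was done) is to label the same sample with both models and compute Cohen’s kappa, which corrects raw agreement for the agreement expected by chance. The label lists below are illustrative, not real results.

```python
from collections import Counter


def cohens_kappa(a: list[str], b: list[str]) -> float:
    """Chance-corrected agreement between two equal-length label lists."""
    if len(a) != len(b) or not a:
        raise ValueError("label lists must be non-empty and the same length")
    n = len(a)
    # Fraction of items on which the two models chose the same label.
    observed = sum(x == y for x, y in zip(a, b)) / n
    count_a, count_b = Counter(a), Counter(b)
    # Agreement expected by chance if each model labeled independently
    # according to its own label frequencies.
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both models used one identical label for every item
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    openai_labels = ["question", "question", "complaint", "praise"]
    local_labels = ["question", "complaint", "complaint", "praise"]
    print(round(cohens_kappa(openai_labels, local_labels), 3))  # 0.636
```

Kappa near 1 means the cheaper or more private model reproduces the other’s labels closely; a low value suggests checking the disagreements by hand before switching.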

Looking Ahead: The Future of Data Analysis

These developments have significant implications. As the tools and techniques mature, AI-assisted data labeling and analysis will become more capable, opening up new possibilities for research, business intelligence, and beyond. Exploring language-model-powered applications is only just beginning.

I invite you to share your thoughts, experiences, or any useful tools you have found for AI-assisted data analysis. Let’s discuss how these advances can reshape the way we work with data, making it faster to process and richer in insight.